I am a Machine Learning Researcher with a PhD in AI for Medicine, specializing in deep learning for ECG analysis. My work focuses on explainable AI, robustness, and performance metrics in biomedical signal processing. I am passionate about interdisciplinary research at the intersection of AI and healthcare, bridging theoretical advancements with real-world applications.

Computers in Biology and Medicine 141, 105114

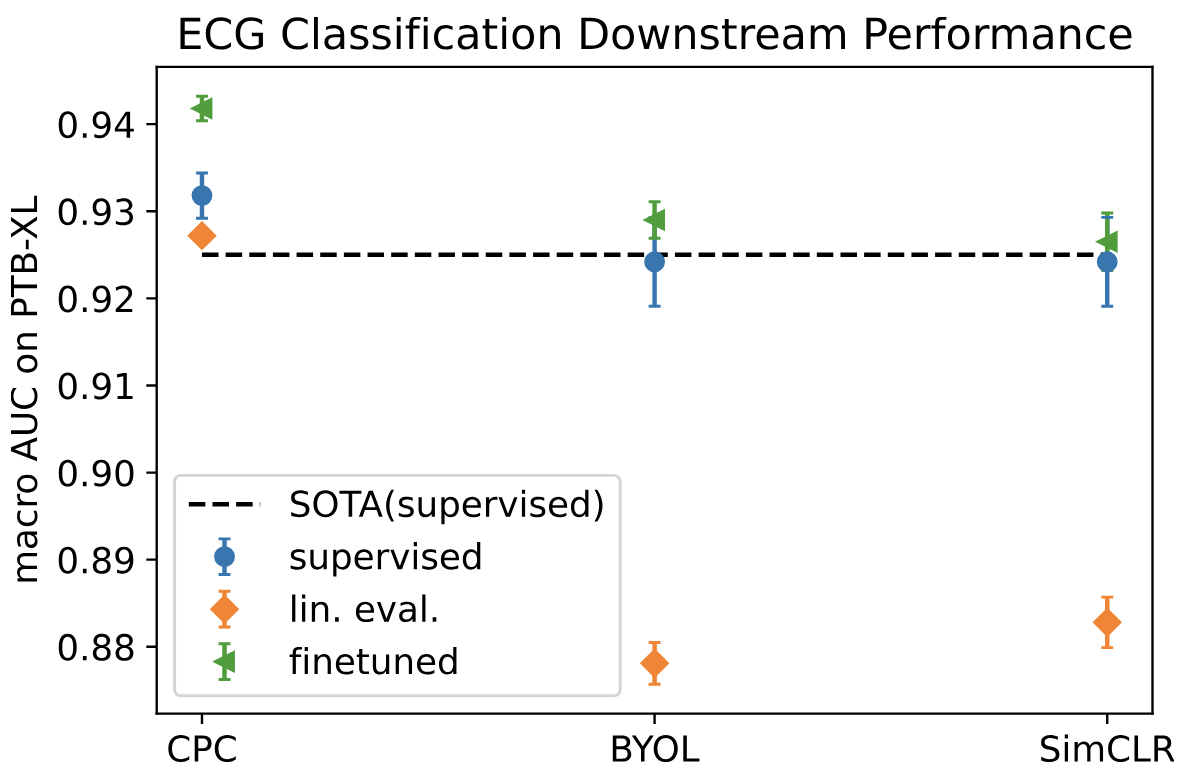

In this work, we explore self-supervised learning (SSL) for ECG analysis to learn meaningful representations without requiring labeled data. By pretraining on large-scale ECG datasets, our approach surpasses convolutional architectures in both supervised and self-supervised settings, achieving state-of-the-art performance. We demonstrate that SSL can enhance generalization, robustness, and data efficiency in ECG classification tasks, paving the way for more scalable and interpretable AI in cardiology.

Scientific Data 10 (1), 279

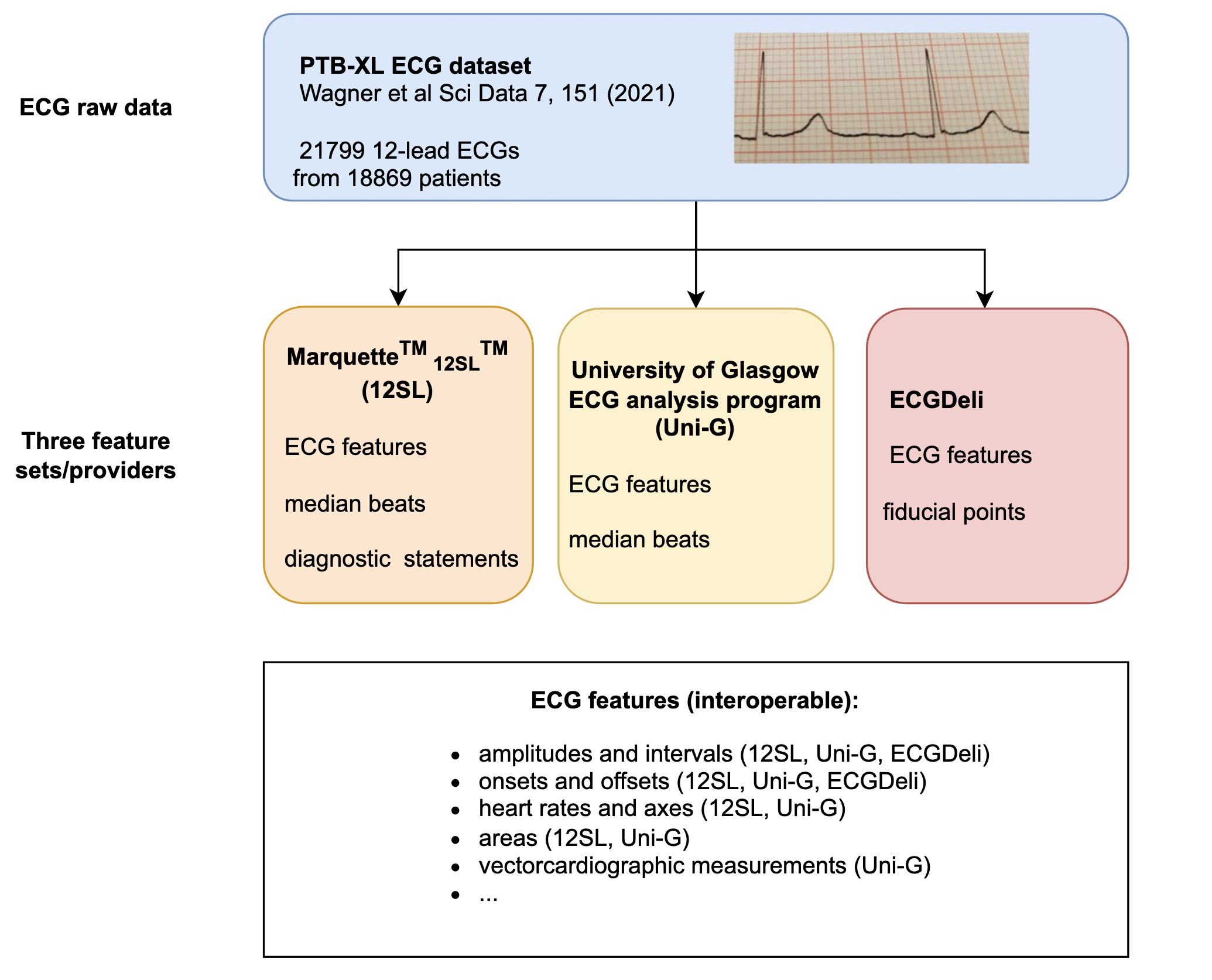

The PTB-XL+ dataset extends the PTB-XL electrocardiogram (ECG) dataset with a comprehensive set of precomputed features derived from its 12-lead ECGs. It includes morphological, spectral, and deep learning-based representations, facilitating research in explainable AI, model robustness, and ECG classification. By offering structured and interpretable feature sets, PTB-XL+ supports both traditional machine learning and deep learning approaches to cardiac signal analysis.

Computers in Biology and Medicine 176, 108525

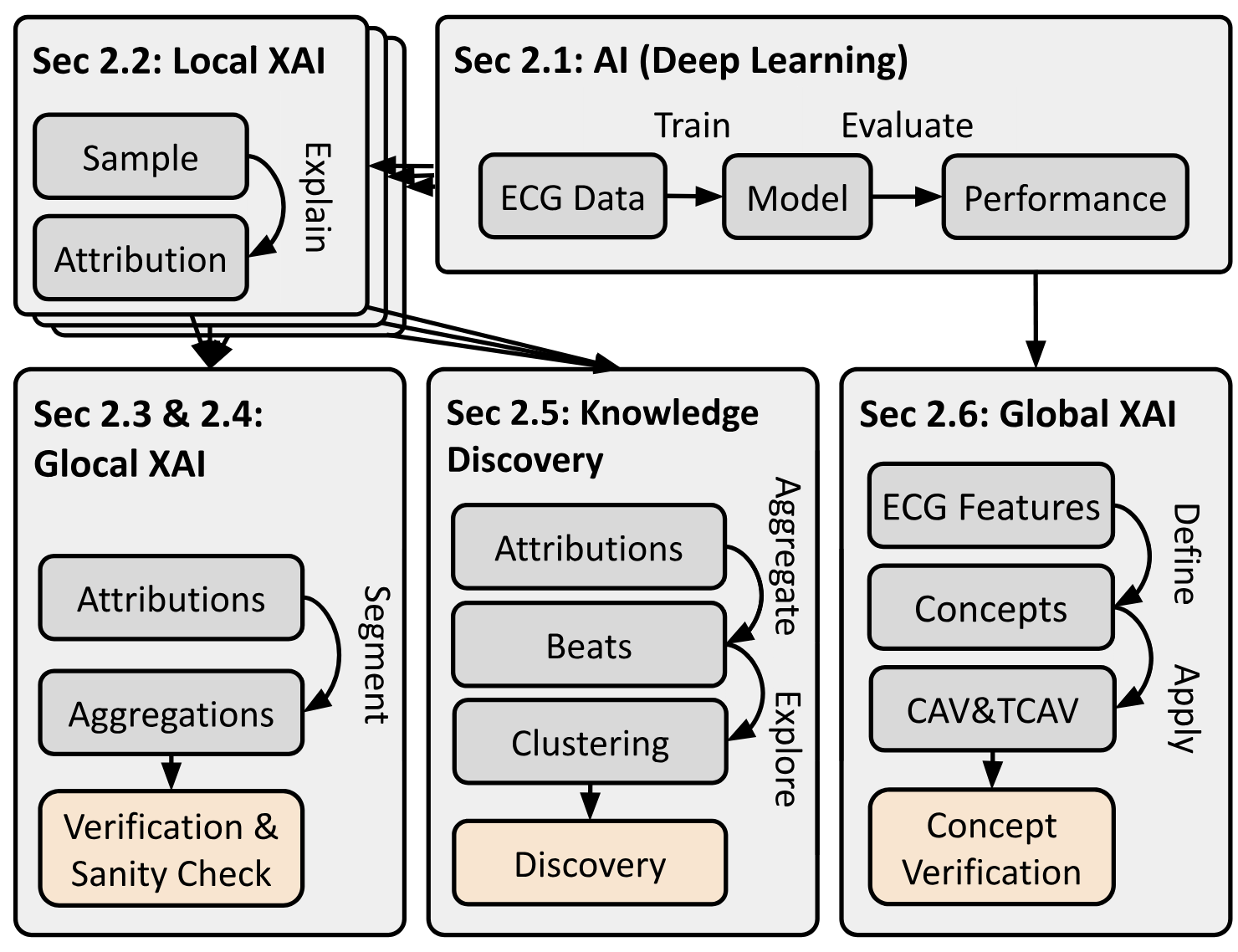

This work explores methods to interpret and audit deep learning models for electrocardiogram (ECG) analysis. The study introduces a structured framework that integrates concept-based AI, segmentation-based approaches, and discovery-driven methods to enhance model explainability.

By combining these approaches, the paper provides tools for auditing model decisions, detecting biases, and improving the transparency of AI systems in healthcare.

IEEE Journal of Biomedical and Health Informatics

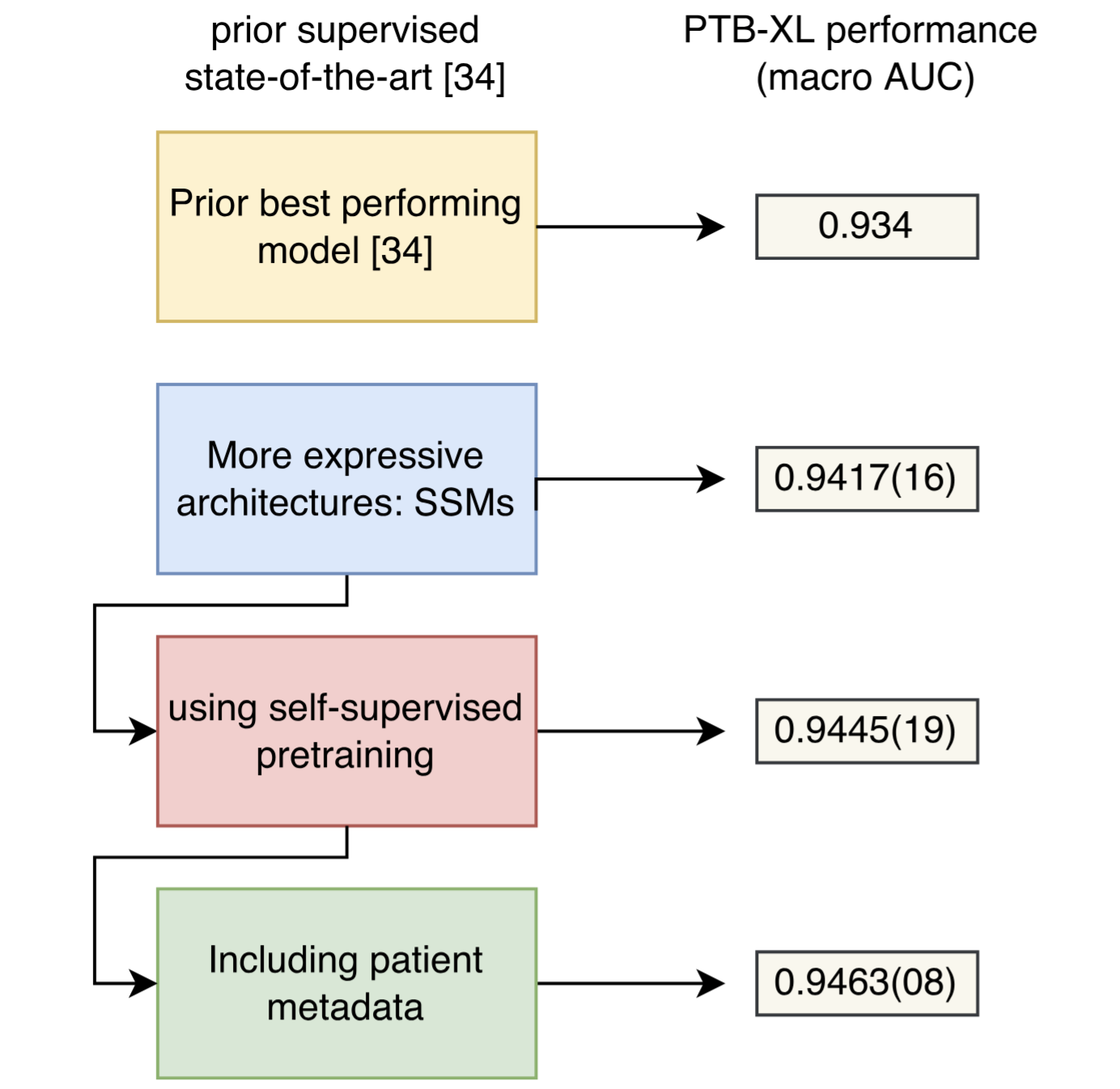

In this work, we introduce a novel framework that integrates structured state space models (SSMs), self-supervised learning, and patient metadata to advance the precision of ECG-based predictions. Our approach challenges conventional convolutional architectures by leveraging SSMs, which are inherently suited for capturing long-range dependencies in physiological signals. We further enhance model performance through self-supervised pretraining, enabling more data-efficient and generalizable feature extraction. Additionally, we incorporate patient-specific metadata to refine predictions, reducing biases and improving clinical relevance.

IEEE Journal of Biomedical and Health Informatics

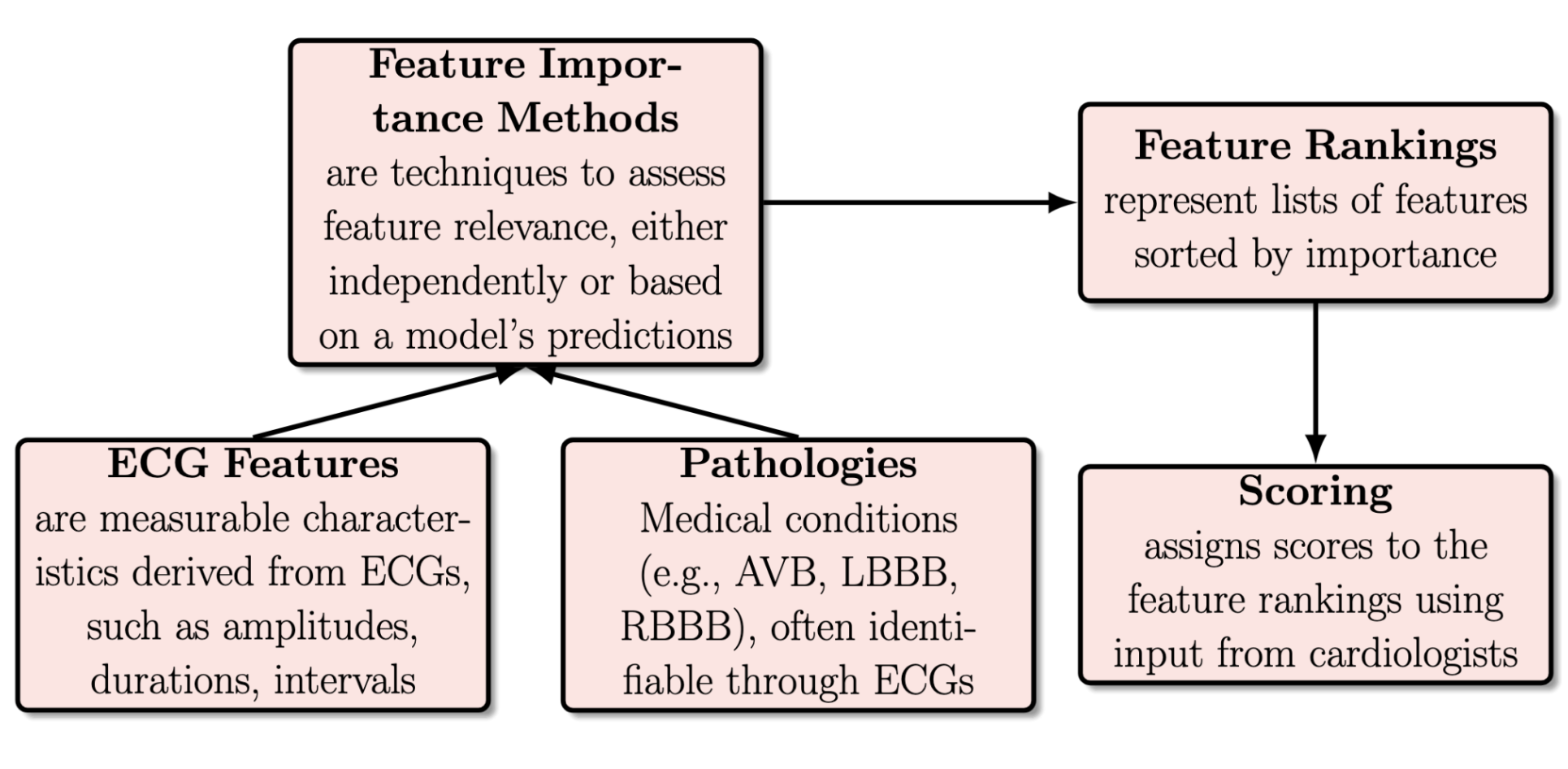

Interpretable AI is crucial for deploying deep learning models in clinical settings, yet discrepancies often arise between algorithmic and human reasoning. In this study, we systematically compare feature importance rankings derived from state-of-the-art machine learning models with those provided by expert cardiologists for ECG classification tasks. Our analysis reveals that while algorithms excel at detecting complex patterns, they sometimes emphasize features that lack clinical significance. Conversely, cardiologists prioritize features grounded in physiological understanding, which may not always align with data-driven importance rankings. By bridging this gap, we highlight the strengths and limitations of both approaches and propose strategies for improving AI-assisted ECG diagnostics. This work underscores the need for human-AI collaboration in medical decision-making, so that model predictions align with expert knowledge and earn greater trust and clinical utility.